This article is part of an editorial series of Expert Talks by Virtuos, aimed at sharing the learnings and best practices in global game development and art production. In this installment, CounterPunch – A Virtuos Studio’s Jay Aschenbrenner, Technical Director, and Mike Montague, Rigging Director, share their experience working on real-time animation projects, namely Enchant Santa Calls.

Established in 2011 and part of Virtuos since 2020, CounterPunch – A Virtuos Studio is a 3D animation studio in Los Angeles that offers a variety of content production services, including facial and body animation, modeling, rigging, and episodic and real-time pipeline support. For over a decade, our CounterPunch team has worked on both pre-rendered and real-time projects on all platforms.

In this Expert Talks installment, we speak with Jay Aschenbrenner, Technical Director, and Mike Montague, Rigging Director at CounterPunch, and delve into how real-time animation can be applied to create better content assets and produce richer experiences for users.

Jay Aschenbrenner (left) and Mike Montague (right)

This festive season, Jay and Mike also took a short walk down memory lane with Santa Calls, CounterPunch’s real-time facial animation project with Enchant Christmas back in 2020.

What Is Real-Time Animation?

Real-time animation is the process of receiving animation data and applying it to a character in near real time. To create real-time animation, motion capture data is applied directly to a character through a retargeting system, allowing users to view it as an end product, evaluate its quality for further fine-tuning, or clean it up to become pre-rendered or canned animation.

The results of real-time animation are instant, and there is no animator involved in the process, allowing for a live experience that can be run in a game engine. According to Jay, our technical director at CounterPunch, it is also possible to stream data into a digital content creation (DCC) tool like Maya to support the sculpting of shapes or animation – this could be done with skeletal animation, but also with real-time point cloud data from a depth camera.

What Are Some of the Exciting Use Cases of Real-Time Animation?

Jay shared that real-time animation is often used on set so that directors can see the results of motion capture actors, which gets cleaned up later to be used as normal animation. “This helps to produce an early cut for dailies directly from the shoot and helps with visualization of where characters appear in the world, as well as the checking of shot composition, blocking, and mesh deformation.”

Mike, our rigging director at CounterPunch, gave an example of real-time animation coming into use. In addition to character animation seen in The Mandalorian, virtual cameras were driven by tracking real camera movements to allow for computer graphics (CG) background elements to be recorded on a live set. Such technological developments allow for more complex foreground and background interaction and compositing in real-time, he said.

Jay remarked that people are likely to have interacted with real-time animation assets in their daily lives more than they think.

Outside of the motion capture world, real-time animation can be used to create fun and social user experiences.

“Most of us would have seen or used some of the popular augmented reality (AR) apps that attach dog ears or layer cartoony features to faces with filters,” Jay pointed out. “Those apps are driven by real-time technology.”

Case Study: Enchant Santa Calls

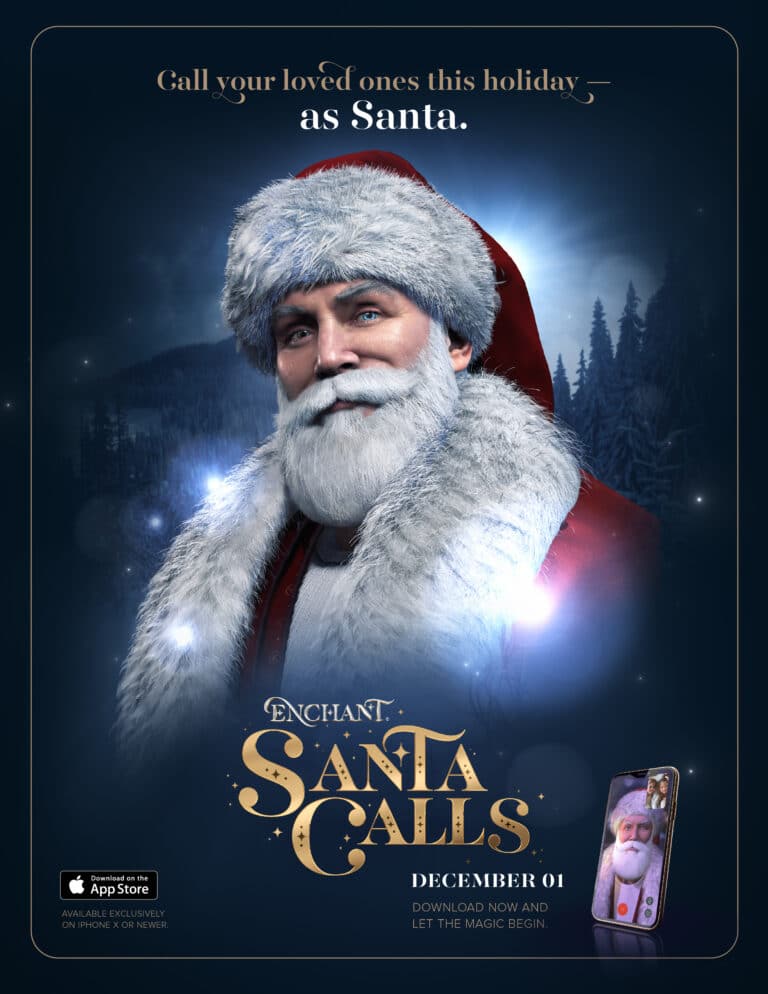

The CounterPunch team is no stranger when it comes to developing various apps and experiences using real-time animation technology – for instance, an Eye Moji app that shows off eye scans in high detail. In 2020, CounterPunch also developed Santa Calls, an iOS app where users can call someone as Santa through a combination of voice modulation, real-time dynamics, cloth, and interactive particle systems.

Image Courtesy of Enchant Labs

Project Background

The COVID-19 pandemic struck hard in 2020, not only foiling business plans but also greatly impacting people’s lives. Many were forced to isolate themselves for their safety and were unable to return home to visit their families during the holiday season. The team at Enchant Labs, which had originally planned to hold Enchant Christmas as a live event, decided to adapt their business model and create Santa Calls, an app that allows users to call someone as Santa using cutting-edge 3D animation technology and facial recognition.

To create Santa Calls in time for the festive season, Enchant Labs needed a partner with the skills, experience, and aptitude to develop the medium that could deliver this experience to their customers, which CounterPunch brought to the table.

Objective

“We were tasked to create multiple photorealistic characters with mobile restriction on the Unity platform,” Jay shared. “To create a believable experience for call receivers, the project utilized a combination of canned animation with ARKit facial tracking and real-time physics simulation.” The CounterPunch team that worked on the Santa Calls project had a broad range of expertise, from modeling, rigging, and animation to technical art.

Before receiving the Enchant Santa Calls project, CounterPunch had already been utilizing ways to drive characters in real-time in the asset creation portion in Maya. This method would allow the team to tweak the face rig to the real-time performance in Maya before putting it in an engine.

Challenges and Solutions

While CounterPunch was familiar with creating real-time animation assets, the team had less working knowledge of running those assets in the Unity engine, which the project had to use as part of Enchant Labs’ requirements. “We had a lot of experience doing the overall process in Unreal Engine, but this was the first time we’ve ported that knowledge over to Unity,” Jay explained.

One feature that was needed but missing in Unity was the ability to snapshot poses in the animation graph to create branching animation logic. To resolve this, the team worked with a developer to recreate the feature in Unity. Along the way, Jay shared, the team chose to incorporate details such as subtle breathing and a camera rotation based on the orientation of the user’s phone, and further improved how they animate hair outside of Unreal Engine’s groom system.

One of the pitfalls that CounterPunch was careful to avoid was the “uncanny valley”, where, in this case, facial features animated in real time could appear inconsistent due to a momentary loss of facial tracking and break the user’s immersion. The feature recreated in Unity resolves this by including several fail-safe mechanisms for when facial tracking is lost. “This feature allows us to blend real-time data with pre-animated data, so if you lost track of the character, it would simply return to an idle state, or if you didn’t blink for a long period, the character would simply do it himself,” Jay explained. “One of the reasons why we did this was so that we wouldn’t upset any children with distortions caused by the loss of facial tracking.”
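The fail-safe behavior Jay describes can be illustrated with a small sketch. This is not CounterPunch's Unity implementation; it is a minimal Python illustration that assumes a face pose is a dictionary of blendshape weights, and every name and value in it (`FailSafeFace`, `fade_seconds`, `max_blink_gap`, the `"blink"` key) is hypothetical. It fades between a canned idle pose and the live tracked pose, and forces a blink when none has been seen for a while.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

Pose = Dict[str, float]  # blendshape name -> weight in [0, 1]


def blend(a: Pose, b: Pose, t: float) -> Pose:
    """Linear blend of two poses: t=0 returns a, t=1 returns b."""
    keys = a.keys() | b.keys()
    return {k: (1.0 - t) * a.get(k, 0.0) + t * b.get(k, 0.0) for k in sorted(keys)}


@dataclass
class FailSafeFace:
    idle: Pose                    # canned idle pose to fall back on
    fade_seconds: float = 0.5     # hypothetical fade time between idle and live
    max_blink_gap: float = 6.0    # hypothetical longest time allowed without a blink
    blink_threshold: float = 0.5  # "blink" weight that counts as a real blink
    live_weight: float = 0.0      # 0 = fully idle, 1 = fully live
    since_blink: float = 0.0
    last_live: Pose = field(default_factory=dict)

    def update(self, tracked: Optional[Pose], dt: float) -> Pose:
        """Advance by dt seconds; tracked is None when the face is lost."""
        step = dt / self.fade_seconds
        if tracked is not None:
            self.last_live = tracked
            self.live_weight = min(1.0, self.live_weight + step)
        else:
            # Tracking lost: fade from the last good pose back to idle
            # instead of snapping or showing distorted data.
            self.live_weight = max(0.0, self.live_weight - step)
        pose = blend(self.idle, self.last_live, self.live_weight)

        # Automatic blink: if no blink was seen for too long, inject one.
        # A real rig would play a canned blink clip rather than a single frame.
        self.since_blink += dt
        if pose.get("blink", 0.0) >= self.blink_threshold:
            self.since_blink = 0.0
        elif self.since_blink >= self.max_blink_gap:
            pose["blink"] = 1.0
            self.since_blink = 0.0
        return pose
```

Calling `update` once per frame with the latest tracked pose, or `None` when the tracker reports no face, keeps the character moving smoothly in either case. Fading the blend weight over time is one simple way to avoid a visible pop when tracking drops out.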

The team also encountered a crisis in the middle of the project when one of the facial tracking APIs used for the Santa Calls app was acquired by another company, forcing them to switch the whole project to another API. Jay shared that it was a challenge to suddenly uproot the project, but the team was able to adapt to the abrupt change and keep the project on track while making the best of the situation. “It sounds scary, but it turned out fine as we were eventually able to understand the differences between the two systems,” Jay said. “We were able to reuse the poses that we made previously and add a few more to increase the quality, with a new facial tracking system.”
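One common way to keep rig poses reusable across tracking systems is to place a thin mapping layer between the tracker's output and the rig. The sketch below illustrates that idea only and is not the approach confirmed by the team. The pose names, tracker coefficient names, and both mapping tables are hypothetical and do not correspond to any specific API.

```python
from typing import Dict

# Poses authored on the rig (hypothetical names). "smile" stands in for a
# pose added later to take advantage of the second tracker.
RIG_POSES = ("jaw_open", "blink_l", "blink_r", "smile")

# Hypothetical coefficient names for two different trackers, each mapped
# onto the same rig poses.
TRACKER_A_MAP = {
    "JawDrop": "jaw_open",
    "LeftEyeClosed": "blink_l",
    "RightEyeClosed": "blink_r",
}
TRACKER_B_MAP = {
    "jawOpen": "jaw_open",
    "eyeBlinkLeft": "blink_l",
    "eyeBlinkRight": "blink_r",
    "mouthSmile": "smile",
}


def to_rig_weights(raw: Dict[str, float], mapping: Dict[str, str]) -> Dict[str, float]:
    """Translate raw tracker coefficients into weights for the rig's poses."""
    weights = {pose: 0.0 for pose in RIG_POSES}
    for name, value in raw.items():
        pose = mapping.get(name)
        if pose is not None:  # ignore coefficients the rig has no pose for
            weights[pose] = max(0.0, min(1.0, value))  # clamp to [0, 1]
    return weights
```

With this layout, swapping trackers means writing a new mapping table rather than re-authoring the poses, and any extra coefficients the new tracker provides can drive newly added poses.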

Delivered a Successful Product

The Enchant Labs team was pleased with the final product delivered by CounterPunch, which overcame a huge hurdle by bringing a technology that had mostly been available on high-end PCs to mobile.

“The partnership with CounterPunch has been amazing; we accomplished so much in such little time that it feels like we have been partners forever.

“The communication, processes, and collaboration when working together on any problems or features were fantastic,” said Hassan Rahim, Director at Enchant Labs, in a testimonial for CounterPunch’s work on Enchant Santa Calls.

As for CounterPunch, delivering Santa Calls to users during the festive period amidst the pandemic was a very fruitful project for the team.

“I’m glad we were able to bring some Christmas cheer to people during a difficult time of isolation. Technology can bring people together,” Jay reflected.

Future Plans for CounterPunch and Real-Time Animation Capabilities

Jay and Mike were both optimistic about the future developments of real-time animation capabilities on the horizon. Mike stressed that real-time animation as a tool will be increasingly important in the industry, and will require even more complex and photorealistic characters as consumers demand better quality. “Our artists and engineers will need to develop tools and techniques to streamline the process of creating characters and iterating fast, to execute the most demanding projects. Our facial rigging tools will continue to improve to leverage the latest technology as it develops,” Mike said.

Jay was excited about developments in technology such as Microsoft HoloLens, which hinted at the possibility of a virtual space that uses, for instance, real-time point clouds and real-time meshing to create a 3D environment that people could traverse. He noted that Epic Games, the maker of Fortnite, had already acquired Hyprsense, whose technology enables game avatars to make facial animations based on a single webcam. “This is something that we can already accomplish at CounterPunch, and I hope to get the opportunity to implement this in future projects,” Jay added.

Jay (right) at work in a motion capture suit

To further strengthen CounterPunch’s efficiency, Jay shared that he is focusing on making real-time animation assets more accessible, with the goal for animators to be able to fire up a system and shoot their mocaps at home. With such convenience, animators will be able to work on projects remotely and ensure a smoother workflow for the team.

In addition to CounterPunch’s deep expertise in facial rigging and animation, Mike also shared that their capabilities have compounded since joining Virtuos. “Virtuos brings a larger workforce that we can rely on to produce larger and more complex projects, and I’m hopeful that the vast amount of technology that we can leverage from Virtuos’ arsenal of tools will be very beneficial to CounterPunch’s projects,” Mike said. The team is already working on several exciting projects, and Mike revealed that they are currently implementing an improved hair solution for an upcoming major title.

With a world of possibilities out there for real-time animation tools as the virtual space continues to grow, there is no doubt that CounterPunch and the rest of Virtuos fully intend to leverage them to deliver the best animation assets for games.